As a developer, I never cared much about Data Lake. While I’ve always sort of been responsible for the full stack, including the database side, I tended to shy away from anything that had too much to do with data. Recently, though, I’ve been working with some customers who have interesting needs when it comes to their data.

You see, in recent years, something has happened to our data: all of it is _potentially_ interesting. We may not know it yet, but all data has potential value. The volume of data is also growing rapidly. Seven (!) years ago, we had already reached the milestone of producing more content every two days than we, as a civilisation, had produced in all of history up to then. There are some interesting reads on this, if you're curious.

One thing that especially caught my attention, being an amateur photographer, is that Data Lake Analytics lets you run diverse workloads, such as ETL, Machine Learning, Cognitive Services and others. Now, that had me thinking…

The Food Map

I’m a coeliac. I was diagnosed as having a gluten allergy. “And not the hipster kind” was basically what my doctor said, followed immediately by: “but you’re allowed to have steak, wine, whiskey and coffee, so you’ll be alright”. Good advice… 😊 Now, back to the problem at hand. Since the diagnosis, I’ve had to change my diet. It usually goes OK, as most places cater for the allergy, but there have been some massive fails; for example, I am never eating a Domino’s Pizza ever again. That was a horrible waste of three days of my life. Since then, I’ve started keeping a food diary. Like a hipster, I’ve taken a photograph of almost every meal I’ve eaten, especially when eating out. Now, to be fair, it started because some of the food I was having was amazingly delicious (thanks Stuart, for the sushi in Seattle).

Like a customer I was working with, I now had a big collection of photos. Granted, theirs runs to several petabytes while mine still fits on my iPhone, but the situation is much the same. In the Big Data mentality, all data has potential. So, what can I do with this?

So I decided to put all the photos in Azure Data Lake Store (ADLS, the storage that powers the Azure Data Lake offering) and write a simple U-SQL query that looks at each photo and, using computer vision (Cognitive Services), figures out whether it’s a picture of food. If it is, the query gets the GPS location where it was taken.

Step 1: Set up the ADLA/ADLS accounts

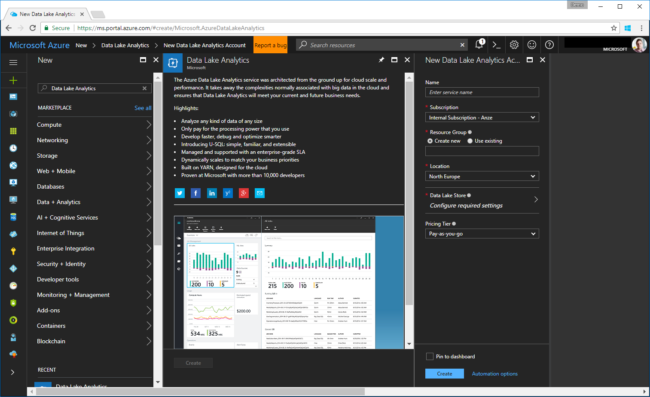

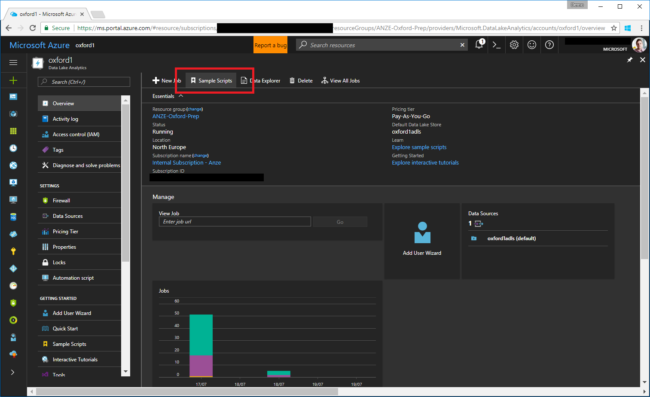

First things first, I had to create an Azure Data Lake Analytics (ADLA) instance in my subscription. That was the easiest part.

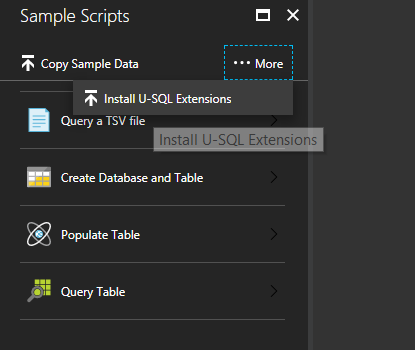

Next up is enabling the Sample Scripts, as per the "documentation". Of course, the "documentation" doesn't mention anywhere how to get the reference assemblies, which meant lots of Googling. Eventually I found someone saying they are added along with the Sample Scripts; after that, you click the More tab and select Install U-SQL Extensions.

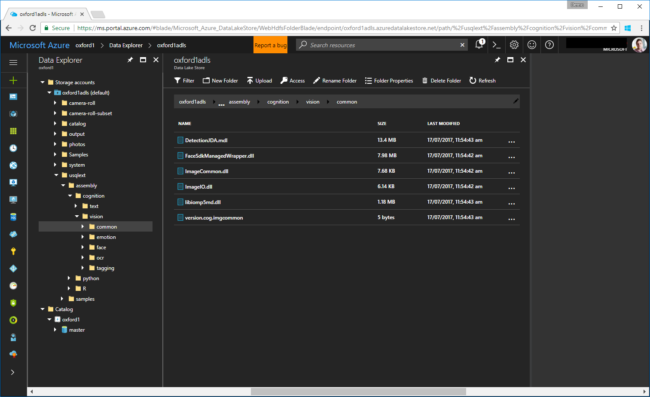

This installs some interesting DLLs into our store. According to a conversation I had a while ago with one of the (awesome) Cognitive Services PMs, these have the same capabilities as the full-blown APIs we offer; only the access method differs (obviously, they aren't accessed via REST APIs).

For my experiment to work, what I actually needed was the Image Tagging algorithm. Sadly, when I tried to run the sample from the documentation, it failed with an internal exception. To check that the extensions worked at all, I fell back on the other example, which uses the Face Detection DLLs, and those did work. The script to test this is here.

Step 2: Implementing the Custom Image Tagger

Now that I had the environment set up, and I knew the Cognitive stuff worked, just not for my specific requirement, I figured I might as well try to do it differently. So I did what any developer would do: downloaded the DLLs and had a peek inside them using dotPeek. I then wrote my own implementation of IProcessor, the base class used to extend U-SQL with custom row processing. U-SQL, by the way, is the language you (can) use to interact with ADLA.

After looking at the implementation provided, I decided to do it a bit differently. This is the core of my implementation:

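```csharp
public override IRow Process(IRow input, IUpdatableRow output)
{
    // Run the tagger over the raw image bytes stored in the image column
    var tags = this.tagger.ProduceTags(input.Get<byte[]>(this.imgColName));

    // Flatten the tag/confidence pairs into a single "tag:confidence;tag:confidence" string
    var stringOfTags = string.Join(";", tags.Select(x => string.Format("{0}:{1}", x.Key, x.Value)));

    // Write the number of tags and the flattened tag string to the output row
    output.Set<int>(this.numColName, tags.Count);
    output.Set<string>(this.tagColName, stringOfTags);

    return output.AsReadOnly();
}
```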

The Process method is called for each row that ADLA processes, along with an output row that is an IUpdatableRow. All we do in there is get the image's byte array and call the ProduceTags method on the "Tagger". Have a look at the full implementation here.
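One detail worth knowing about the Process method above: `string.Format("{0}:{1}", ...)` formats each confidence with the current culture, so on a machine whose culture uses a decimal comma, 0.937 would come out as 0,937. The sketch below shows the same flattening with the invariant culture. It assumes ProduceTags returns an `IDictionary<string, float>` of tag name to confidence (the post doesn't show its return type), and `TagFormatter.Flatten` is a hypothetical name, not part of the original project.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical helper: flattens tag/confidence pairs into "tag:confidence;tag:confidence".
// Assumes tags arrive as a dictionary of tag name -> confidence.
public static class TagFormatter
{
    public static string Flatten(IDictionary<string, float> tags)
    {
        // Format with the invariant culture so the decimal separator is always '.',
        // whatever locale the code happens to run under.
        return string.Join(";", tags.Select(x =>
            string.Format(CultureInfo.InvariantCulture, "{0}:{1}", x.Key, x.Value)));
    }
}
```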

I implemented this as a Class Library (For U-SQL Application) project in my Visual Studio solution. To use it, though, you have to register it first. That involves a few steps:

- Right-click on the project and select Register Assembly...

- Make sure you select the right account; this caught me out more than anything else...

- I also opted to deploy Managed Dependencies (specifically ImageRecognitionWrapper) directly from the existing set in the cloud. The path will look something like this: adl://oxford1adls.azuredatalakestore.net/usqlext/assembly/cognition/vision/tagging/ImageRecognitionWrapper.dll.

- Remember to tick Replace Assembly if it already exists. You will forget it, and it will fail.

Now then, time for our first run.

How did it do? Let's take a look at one of the photos:

That's some mighty good sashimi I had with a colleague in Seattle. Here is the output ADLA produced for this photo, formatted for readability:

| **Tag** | **Confidence (0-1)** |
|---|---|
| food | 0.9370058 |
| table | 0.906905 |
| plate | 0.8964964 |
| square | 0.6789738 |
| piece | 0.6518051 |
| indoor | 0.6433577 |
| dish | 0.9999959 |
| sashimi | 0.961922 |

It's worth noting that I've added this line of code to the tagger implementation:

```csharp
if (!results.ContainsKey(result.name) && result.confidence > _threshold)
```

I've set the default threshold to 0.5, so I only get the tags the algorithm is reasonably confident about (the check also skips tag names that are already in the results). That works for me. Another interesting thing is the confidence level for sashimi, at 0.96. That's just amazing.
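The condition above does two things: it keeps only the first accepted result for each tag name, and it drops anything at or below the threshold. Here is a self-contained sketch of that filtering step, assuming the raw results can be treated as (name, confidence) pairs; the real result type in ImageRecognitionWrapper isn't shown here, and `TagFilter.Apply` is a hypothetical name.

```csharp
using System.Collections.Generic;

// Hypothetical sketch of the tagger's threshold filter.
// Assumes raw results are (name, confidence) pairs; the real result type may differ.
public static class TagFilter
{
    public static Dictionary<string, float> Apply(
        IEnumerable<(string Name, float Confidence)> rawResults, float threshold = 0.5f)
    {
        var results = new Dictionary<string, float>();
        foreach (var result in rawResults)
        {
            // Same condition as the tagger: skip names already accepted,
            // and anything the model is not confident enough about.
            if (!results.ContainsKey(result.Name) && result.Confidence > threshold)
            {
                results.Add(result.Name, result.Confidence);
            }
        }
        return results;
    }
}
```

Because the duplicate check only looks at names already accepted, a low-confidence first occurrence doesn't block a later, more confident one.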

Step 3: Filtering the ones with food

Now that we have our computer vision sorted, we need to find only the photos that have food in them. I used the U-SQL extensibility for this again, and implemented a simple C# code-behind function:

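```csharp
using System;

namespace ImageTagging
{
    public class Helper
    {
        // Case-insensitive check for whether the tag string produced by the
        // image tagger (e.g. "food:0.937;table:0.907") mentions the given tag.
        public static bool HasTag(string tag, string column)
        {
            // Guard against rows with no tags, which would otherwise throw
            if (string.IsNullOrEmpty(tag) || string.IsNullOrEmpty(column))
            {
                return false;
            }

            // Ordinal, case-insensitive substring search (no lower-cased copies needed)
            return column.IndexOf(tag, StringComparison.OrdinalIgnoreCase) >= 0;
        }
    }
}
```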

I know it's basic, but it's fine for our POC. I can call this from my U-SQL statement, like so:

```sql
@combined =
    SELECT m.FileName AS FileName,
           m.image_lat AS ImageLat,
           m.image_long AS ImageLong,
           r.NumObjects AS NumObject,
           r.Tags AS Tags
    FROM @metadata AS m
         INNER JOIN (SELECT * FROM @results) AS r
         ON r.FileName == m.FileName
    WHERE ImageTagging.Helper.HasTag("food", r.Tags);
```

This statement comes from a bit further along in the script, but it is essentially a SQL WHERE clause. The difference is that instead of comparing values, it calls the C# function above, which returns a boolean. How cool is that?
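Since HasTag does a substring search over the whole tag string, asking for "food" would also match a hypothetical tag such as "seafood". If that ever matters, a stricter variant could split the string produced by the processor (`tag:confidence` pairs separated by `;`) and compare names exactly. A sketch, with `TagMatcher.HasExactTag` as a hypothetical name; placed in the code-behind, it would presumably be called from the WHERE clause the same way as HasTag.

```csharp
using System;

// Hypothetical stricter alternative to HasTag: exact, case-insensitive tag-name match.
// Assumes the "tag:confidence;tag:confidence" format produced by the processor.
public static class TagMatcher
{
    public static bool HasExactTag(string tag, string column)
    {
        if (string.IsNullOrEmpty(tag) || string.IsNullOrEmpty(column))
        {
            return false;
        }

        foreach (var entry in column.Split(';'))
        {
            // The tag name is everything before the last ':' (the confidence follows it)
            int separator = entry.LastIndexOf(':');
            string name = separator >= 0 ? entry.Substring(0, separator) : entry;

            if (string.Equals(name, tag, StringComparison.OrdinalIgnoreCase))
            {
                return true;
            }
        }
        return false;
    }
}
```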

Step 4: GPS location extraction

I now had the photos analysed, and I was confident I could pick out the ones with food. So, next step: the location. These days, most photos have GPS coordinates embedded in their EXIF metadata. From working with another customer, I already knew where to look and how to get to it. It involved copying some code from a U-SQL sample repository, which gave me some fun capabilities for dealing with images, including a pointer on how to read metadata. However, as it turns out, the GPS coordinates are stored a bit differently: each one is spread across two properties. Latitude and longitude are there as you'd expect, but each also has a "reference" field that gives the hemisphere (N or S for latitude, E or W for longitude). Through the magic of Stack Overflow, I got to a working implementation:

```csharp
// These methods live in the CustomImageTagger.ImageOps class, alongside the
// StreamImage type and byteArrayToImage helper copied from the U-SQL sample.
// Requires: using System; using System.Drawing.Imaging; using System.Globalization; using System.Linq;

public static string getImageGPSProperty(byte[] inBytes, int propertyId, int referencePropertyId)
{
    using (StreamImage inImage = byteArrayToImage(inBytes))
    {
        // The coordinate and its N/S or E/W reference are separate EXIF properties
        var prop = inImage.mImage.PropertyItems.FirstOrDefault(x => x.Id == propertyId);
        var propRef = inImage.mImage.PropertyItems.FirstOrDefault(x => x.Id == referencePropertyId);

        if (prop == null || propRef == null)
        {
            // No GPS data in this photo
            return null;
        }

        // Invariant culture keeps '.' as the decimal separator in the CSV output
        return ExifGpsToDouble(propRef, prop).ToString(CultureInfo.InvariantCulture);
    }
}

private static double ExifGpsToDouble(PropertyItem propItemRef, PropertyItem propItem)
{
    // The value holds three unsigned rationals (degrees, minutes, seconds),
    // each a 4-byte numerator followed by a 4-byte denominator
    double degrees = (double)BitConverter.ToUInt32(propItem.Value, 0) / BitConverter.ToUInt32(propItem.Value, 4);
    double minutes = (double)BitConverter.ToUInt32(propItem.Value, 8) / BitConverter.ToUInt32(propItem.Value, 12);
    double seconds = (double)BitConverter.ToUInt32(propItem.Value, 16) / BitConverter.ToUInt32(propItem.Value, 20);

    double coordinate = degrees + (minutes / 60d) + (seconds / 3600d);

    // The reference is a single ASCII letter: N, S, E or W
    char gpsRef = (char)propItemRef.Value[0];
    if (gpsRef == 'S' || gpsRef == 'W')
    {
        coordinate = -coordinate;
    }

    return coordinate;
}
```
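To check the conversion in isolation, here is a sketch of the same arithmetic (coordinate = degrees + minutes/60 + seconds/3600, negated for S or W) working on a raw byte array rather than a System.Drawing PropertyItem, so it runs without GDI+. Like the original, it assumes the 24-byte value holds three rationals in the byte order BitConverter expects on the host; `GpsDecoder` is a hypothetical name.

```csharp
using System;

// Hypothetical sketch: decode an EXIF GPS coordinate (three unsigned rationals:
// degrees, minutes, seconds) plus its reference letter into signed decimal degrees.
public static class GpsDecoder
{
    public static double ToDecimalDegrees(byte[] value, char reference)
    {
        if (value == null || value.Length < 24)
        {
            throw new ArgumentException("Expected 24 bytes (three rationals).", nameof(value));
        }

        double degrees = ReadRational(value, 0);
        double minutes = ReadRational(value, 8);
        double seconds = ReadRational(value, 16);

        double coordinate = degrees + minutes / 60d + seconds / 3600d;

        // South latitudes and West longitudes are negative in decimal degrees
        return reference == 'S' || reference == 'W' ? -coordinate : coordinate;
    }

    // Each rational is a 4-byte numerator followed by a 4-byte denominator
    private static double ReadRational(byte[] value, int offset)
    {
        uint numerator = BitConverter.ToUInt32(value, offset);
        uint denominator = BitConverter.ToUInt32(value, offset + 4);
        return denominator == 0 ? double.NaN : (double)numerator / denominator;
    }
}
```

Unlike the original, a zero denominator yields NaN for the whole coordinate rather than a possible infinity, which is easier to spot in the output.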

To use this, I needed to go back to the U-SQL script:

```sql
DECLARE @image_gps_lat = 2; // the ID of the EXIF tag for the latitude (its reference tag is 1)
DECLARE @image_gps_long = 4; // the ID of the EXIF tag for the longitude (its reference tag is 3)

@metadata =
    SELECT FileName,
           CustomImageTagger.ImageOps.getImageGPSProperty(ImgData, @image_gps_lat, @image_gps_lat-1) AS image_lat,
           CustomImageTagger.ImageOps.getImageGPSProperty(ImgData, @image_gps_long, @image_gps_long-1) AS image_long
    FROM @imgs;
```

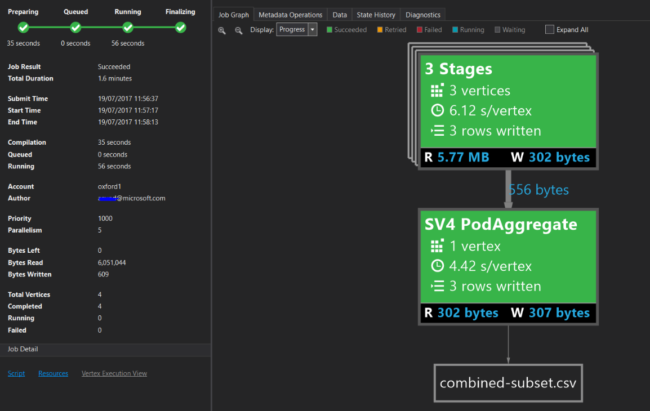

The U-SQL statement shown earlier now makes more sense. We split the processing into two branches, one extracting the GPS location and the other the tags; then we join the two results and keep only the rows that have food as a tag. The result is written to a CSV file. No particular reason, just to keep it simple.
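Outside of ADLA, the same two-branch shape can be sketched with LINQ over in-memory data: one sequence carries the GPS metadata per file, the other the tags, and they are joined on file name and filtered for food. The `PhotoMetadata` and `PhotoTags` records and `FoodMap.Build` are hypothetical stand-ins for the @metadata and @results rowsets and the CSV output; the food check is the same case-insensitive substring test HasTag performs.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical stand-ins for the @metadata and @results rowsets.
public record PhotoMetadata(string FileName, string ImageLat, string ImageLong);
public record PhotoTags(string FileName, int NumObjects, string Tags);

public static class FoodMap
{
    // Joins the two branches on FileName, keeps rows whose tags mention "food",
    // and returns CSV lines in the same column order as @combined.
    public static List<string> Build(IEnumerable<PhotoMetadata> metadata, IEnumerable<PhotoTags> results)
    {
        return (from m in metadata
                join r in results on m.FileName equals r.FileName
                where r.Tags != null && r.Tags.IndexOf("food", StringComparison.OrdinalIgnoreCase) >= 0
                select string.Join(",", m.FileName, m.ImageLat, m.ImageLong,
                                   r.NumObjects.ToString(CultureInfo.InvariantCulture), Quote(r.Tags)))
               .ToList();
    }

    // Quote a CSV field, doubling any embedded quotes
    private static string Quote(string field) => "\"" + field.Replace("\"", "\"\"") + "\"";
}
```

The Tags field is quoted because, while the tag string currently contains only `:` and `;`, a comma in a tag name would otherwise shift the CSV columns.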

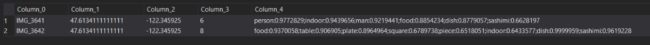

Running this now gives us the following result (subset):

Now we're getting somewhere. The coordinates are correct and correspond to Umi, in Seattle. Omnomnom.

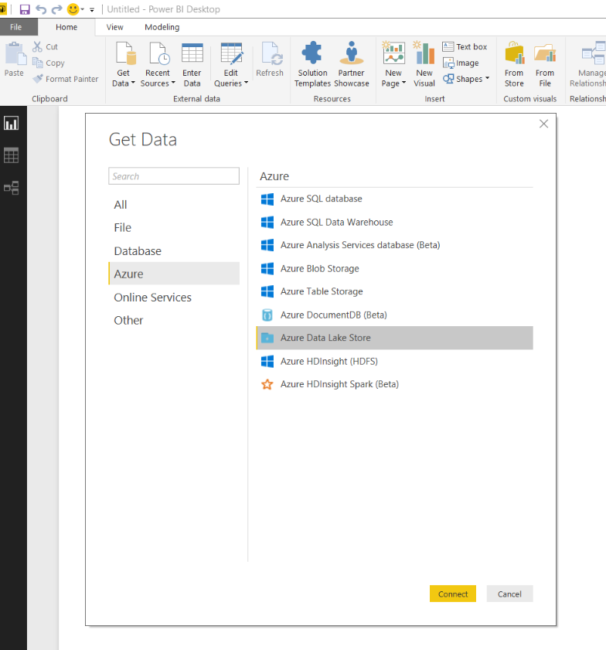

Step 5: Displaying the results on a map

All that's left is to display the results on a map. I wanted to keep it simple and just display a dot for each location I've eaten at. Power BI seemed the obvious choice for that. There's an easy way to get data from ADLS, but you need to use the desktop version of Power BI.

You then provide the full URL of the CSV file output by the script, and do a bit of trickery. Running this for a subset of my photos produces the following map:

Conclusion

I've published all the code for this on GitHub. Between this post and that repository, you should have enough to get started. As for me, my next step is to upload a lot more of my photos and keep improving the map. I'll also consider outputting the results to something other than CSV, though for now that's not really a problem. The one thing I would love, though, is to actually display the photos themselves. Guess that'll be the next step.