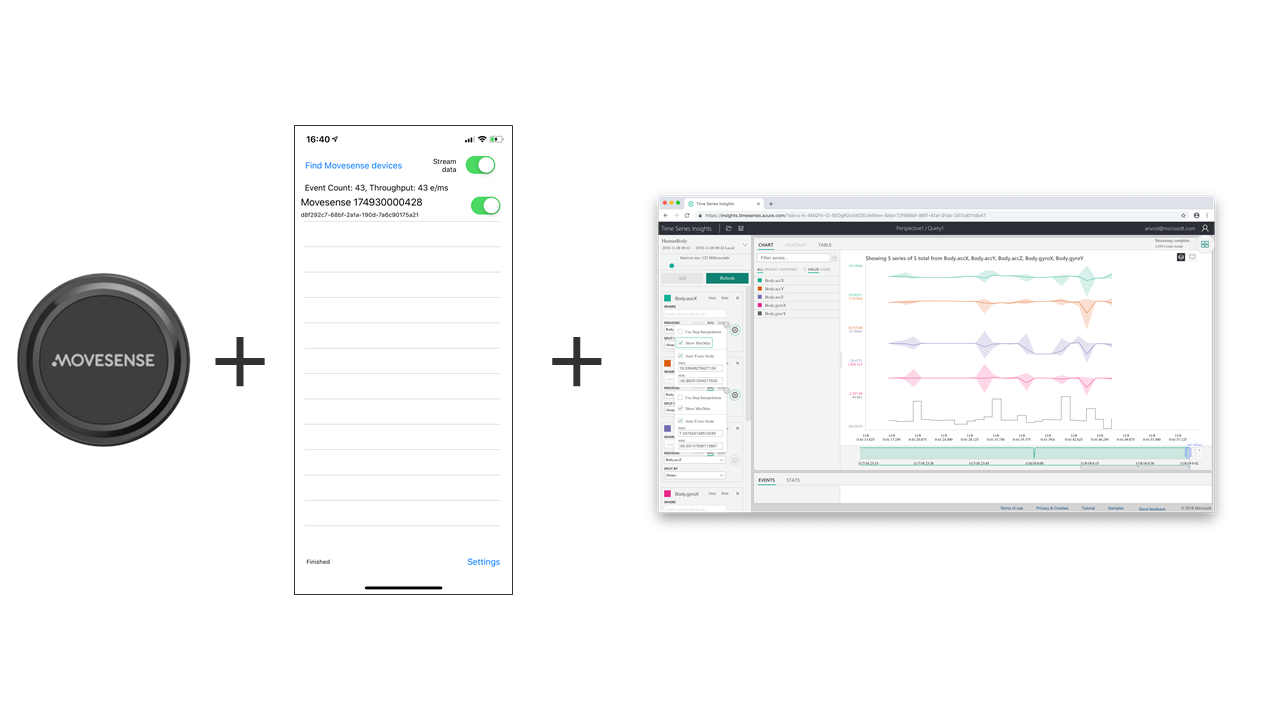

I've recently been lucky enough to work with Movesense sensors. They're built by a Finnish company called Suunto, which most of us know as a manufacturer of dive computers and watches. I worked with Andy Wigley on the library that enables Xamarin developers to use the sensors from their mobile apps, supporting both Android and iOS development.

While it was great to be involved in some really awesome projects (which aren't public just yet), I also wanted to play around with the sensors myself and work on the architecture that we implemented for those projects with the wider team at Microsoft.

Essentially, I wanted to build an app that looks at a person's movement throughout the day and tries to discern a pattern, e.g. did I get tired at some point during the day, or did I spend a lot of it walking? I am fully aware that there are products that already do this (Apple Watch, Fitbit), but I wanted to use these sensors and write the code myself as a learning exercise. I also enlisted the help of my colleagues for the Magical Pixie Dust side of things (Machine Learning).

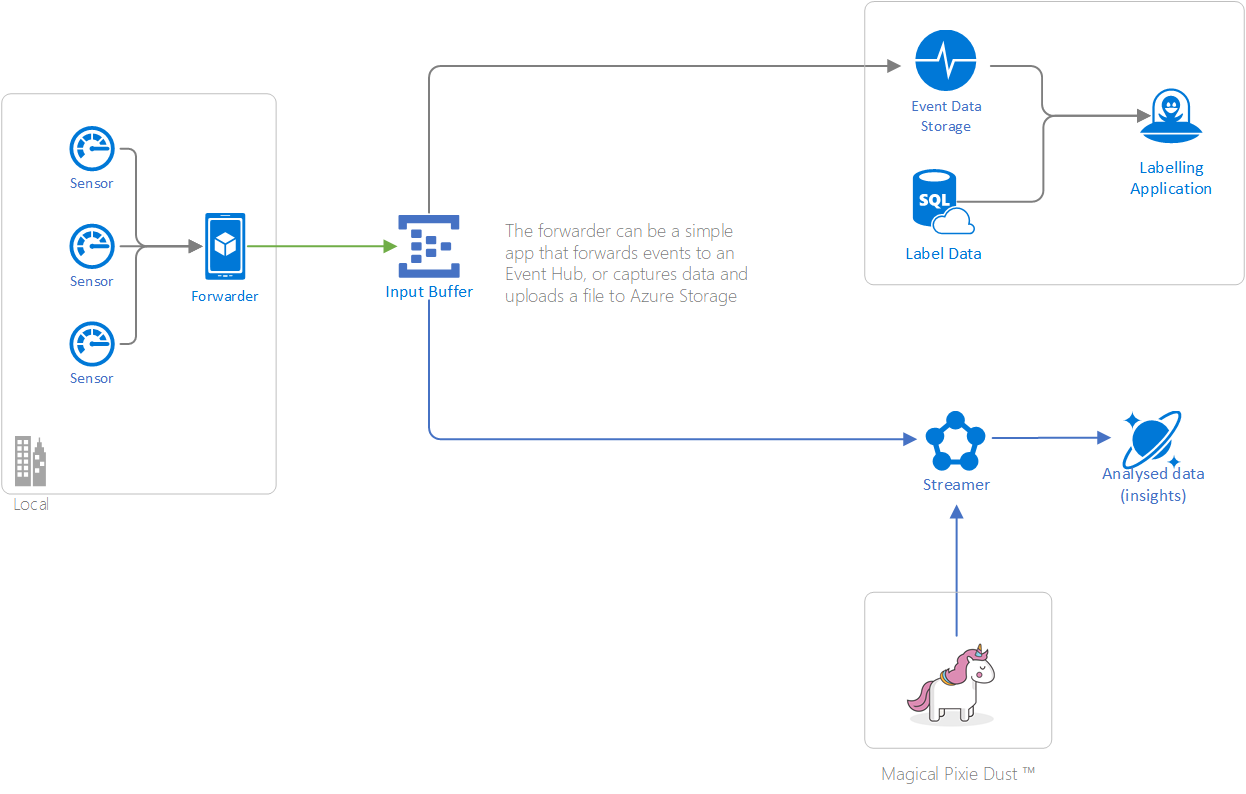

We did come up with a much more sophisticated architecture suitable for production use cases, but for this project I wanted to simplify it as much as I possibly could. The diagram above shows the final result, which basically boils down to the following components:

- A mobile app connecting to the sensors and forwarding the data to an
- Input buffer (i.e. Event Hub), which outputs data to
- A visualization application built on top of Event Storage (i.e. Time Series Insights)
- and a Stream processor that connects to the machine learning model and stores the results in
- A database.

For this blog post, I'll only focus on the mobile app, the Event Hub and the Time Series Insights visualization.

Flashing the sensors

Movesense were kind enough to ship over a bunch of sensors to play around with. The sensors were also used by Sophia Botz, a university student, who actually built something very similar to what I'm writing about. The repository for her project is also publicly available.

Before I could use the sensors, though, I had to flash them with the latest firmware. The device library repository is publicly available and includes instructions on setting up the build environment, which proved the most difficult part for me. I initially tried installing everything on my Windows laptop, but getting all the right versions seemed like a waste of time. The Movesense dev team helpfully prepared a Vagrant script; however, on a Hyper-V enabled machine, it proved a bit more fun to set up than it should have been. Originally, the Vagrantfile has this line:

```ruby
config.vm.box = 'ubuntu/artful64'
```

That's a problem, as that particular box only supports VirtualBox as a provider. I didn't want to install VirtualBox, as I'm quite happy running Hyper-V. Thankfully, Vagrant does support Hyper-V as a provider, but I needed to change the vm.box setting above. Looking through the list of available Vagrant boxes, I replaced it with generic/ubuntu1804, which turned out to work nicely. After running vagrant up, the machine took a while to be up and ready, but after that everything basically just worked.

The next step was to get the identity file. Once the machine was up and running, I ran vagrant ssh-config. The output looks like this:

```
Host default
  HostName 172.20.13.23
  User vagrant
  Port 22
  UserKnownHostsFile /dev/null
  StrictHostKeyChecking no
  PasswordAuthentication no
  IdentityFile C:/source/movesense-device-lib/.vagrant/machines/default/hyperv/private_key
  IdentitiesOnly yes
  LogLevel FATAL
  ForwardAgent yes
```

As I use an SSH key to authenticate to Bitbucket, it was easier to just copy the source over to the VM that Vagrant had set up than to clone it from inside the VM. So I zipped up the whole folder and used scp to copy it over. From the output above, we need the HostName and the IdentityFile to put together the following scp command:

```powershell
scp -P 22 -i C:/source/movesense-device-lib/.vagrant/machines/default/hyperv/private_key .\source.zip vagrant@172.20.13.23:/home/vagrant/source/source.zip
```

Next up, I logged into the VM using vagrant ssh, unzipped the source folder, created a new myBuild folder (I know, imaginative name!) and, from inside it, ran:

```bash
cmake -G Ninja -DMOVESENSE_CORE_LIBRARY=../MovesenseCoreLib/ -DCMAKE_TOOLCHAIN_FILE=../MovesenseCoreLib/toolchain/gcc-nrf52.cmake ../samples/hello_world_app
```

Out of all the samples, I decided to try the Hello World one. I'll explore the others as the project moves forward, depending on what I actually want to record.

Anyway, once CMake had generated the build files, I needed to create the Over The Air (OTA) firmware package, which is built with Ninja:

```bash
ninja dfupkg
```

This generates two zip files; I used the one with the bootloader, for lack of a better idea. It was now time to fire up Android Studio. For those of you who are used to Visual Studio, this will be a horrible experience: it just took forever for the thing to load and actually become "ready" to run the app. I then plugged in a borrowed Android device (I'm an Apple fanboy when it comes to hardware). The sample application is in the Mobile Lib repository. The app has an icon that takes you to DFU mode, which lets you perform over-the-air updates. For that, you need the zip file (I transferred it to the device using OneDrive) and, obviously, the sensor.

Xamarin App

Real-time vs File-based

The first major architectural decision is whether to stream the data in real time, or capture it over a period of time and then upload it to a back-end. If I were developing this for the real world, I'd probably opt for a combination of the two: stream in real time, but fall back on temporarily storing data on the device to account for communication failures (e.g. network unavailable, service down). For this project, however, I wanted to explore Microsoft's libraries for event ingestion, so I focused on streaming.

I'll be honest here: using those libraries from Xamarin is broken. My first thought was to use the Microsoft.Azure.EventHubs NuGet package, which would let me stream events directly into an Event Hub instance. However, that package is incompatible with Xamarin. The problem is tracked in an issue on GitHub, and it seems to be caused by a fixed version reference in one of its dependencies (Microsoft.Azure.Services.AppAuthentication).

I then tried using the Kafka endpoint in Event Hubs, whose General Availability was announced just a few days ago (at the time of writing). I tried Confluent's NuGet library, but that failed as well, throwing various exceptions, mostly related to certificates and/or linking problems.

In the end, I resorted to simply using the REST API that Event Hubs generously provides. That meant writing a simple class that is basically an in-memory buffer, which sends the buffered events once a second. The code itself is fairly simple, and probably not production ready at all. But it works.

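```csharp
// Body of the buffer's async send loop. Needs System.Linq, System.Net.Http,
// System.Net.Http.Headers and System.Threading.Tasks; _isSending, _token,
// _buffer, uri and GenerateToken() are members of the buffer class.
using (var httpClient = new System.Net.Http.HttpClient())
{
    while (_isSending)
    {
        if (_token == null) { GenerateToken(); }
        httpClient.DefaultRequestHeaders.Authorization =
            new AuthenticationHeaderValue("SharedAccessSignature", _token);

        // Snapshot the buffered events and clear the buffer under the lock.
        string[] buffer;
        lock (_buffer)
        {
            buffer = new string[_buffer.Count];
            _buffer.CopyTo(buffer);
            _buffer.Clear();
        }

        // Skip the request entirely when nothing was buffered.
        if (buffer.Length > 0)
        {
            // Wrap each JSON event as {"Body": ...} and send them as one JSON array.
            var payload = string.Join(",", buffer.Select(x => $"{{\"Body\":{x}}}"));
            payload = $"[{payload}]";

            var re = await httpClient.PostAsync(uri, new StringContent(payload));
            if ((int)re.StatusCode != 201) { /* panic */ }
        }

        // Wait a second without blocking the thread (Thread.Sleep would).
        await Task.Delay(1000);
    }
}
```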
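The loop calls GenerateToken() without showing it. The Event Hubs REST API authenticates requests with a Shared Access Signature (SAS) token: an HMAC-SHA256 signature, computed with a shared access policy's key, over the URL-encoded resource URI and an expiry time. Below is a minimal sketch of such a method, following the token format in Microsoft's SAS documentation; the class name, the parameters and passing the expiry in explicitly are my assumptions, not the app's actual code. Since the loop passes the token to AuthenticationHeaderValue("SharedAccessSignature", _token), the sketch returns the token without that prefix.

```csharp
using System;
using System.Globalization;
using System.Net;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: names and parameters are illustrative assumptions.
public static class SasTokenSketch
{
    // Returns "sr=...&sig=...&se=...&skn=...", i.e. the part that follows the
    // "SharedAccessSignature" scheme in the Authorization header.
    public static string GenerateToken(string resourceUri, string keyName, string key,
                                       DateTimeOffset expiresAt)
    {
        string encodedUri = WebUtility.UrlEncode(resourceUri);

        // Expiry is expressed as seconds since the Unix epoch.
        string expiry = expiresAt.ToUnixTimeSeconds().ToString(CultureInfo.InvariantCulture);

        // The signed string is the encoded resource URI and the expiry, separated by a newline.
        string stringToSign = encodedUri + "\n" + expiry;

        using (var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(key)))
        {
            string signature = Convert.ToBase64String(
                hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign)));

            // The Base64 signature can contain '+', '/' and '=', so it is URL-encoded too.
            return string.Format(CultureInfo.InvariantCulture,
                "sr={0}&sig={1}&se={2}&skn={3}",
                encodedUri, WebUtility.UrlEncode(signature), expiry, keyName);
        }
    }
}
```

In the loop, GenerateToken() could then set something like _token = SasTokenSketch.GenerateToken(hubUrl, keyName, key, DateTimeOffset.UtcNow.AddHours(1)), where hubUrl is https://<namespace>.servicebus.windows.net/<eventhub> and keyName/key come from a policy with Send rights (all placeholders). Because the loop only generates a token when _token is null, a session that outlives the expiry would also need to refresh it. Microsoft's C# sample uses HttpUtility.UrlEncode; I've used WebUtility.UrlEncode so the sketch doesn't depend on System.Web.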

To be fair, this approach is useful enough for now, but I'll probably change it to save events to a temporary file first, to meet the earlier requirement of handling periods without internet connectivity. At that point, the buffer component will likely just become a file stream. There's an issue for this on GitHub in case anybody wants to help out.
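A file-backed version of the buffer could look roughly like the minimal sketch below: each event is appended to a file as one line of JSON, and the send loop peeks at the oldest events and only removes them once Event Hubs has accepted them, so nothing is lost while the network is down. The class and method names and the one-event-per-line format are my assumptions rather than what's in the repository, and the sketch relies on serialized events never containing line breaks.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical file-backed buffer: one serialized event per line.
public class FileEventBuffer
{
    private readonly string _path;
    private readonly object _sync = new object();

    public FileEventBuffer(string path) { _path = path; }

    // Called from the sensor callback for every reading.
    public void Append(string jsonEvent)
    {
        lock (_sync)
        {
            File.AppendAllLines(_path, new[] { jsonEvent });
        }
    }

    // Returns up to maxCount of the oldest events without removing them,
    // so a failed upload leaves them in place for the next attempt.
    public IReadOnlyList<string> Peek(int maxCount)
    {
        lock (_sync)
        {
            if (!File.Exists(_path)) return Array.Empty<string>();
            return File.ReadLines(_path).Take(maxCount).ToList();
        }
    }

    // Drops the oldest count events; call only after a successful send (HTTP 201).
    public void Remove(int count)
    {
        lock (_sync)
        {
            if (!File.Exists(_path)) return;
            var remaining = File.ReadAllLines(_path).Skip(count).ToArray();
            File.WriteAllLines(_path, remaining);
        }
    }
}
```

In the send loop, Peek would replace the copy-and-clear step, and Remove(batch.Count) would only run after the 201 check passes. Rewriting the whole file on every successful send keeps the sketch simple, but it's not something I'd ship without measuring first.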

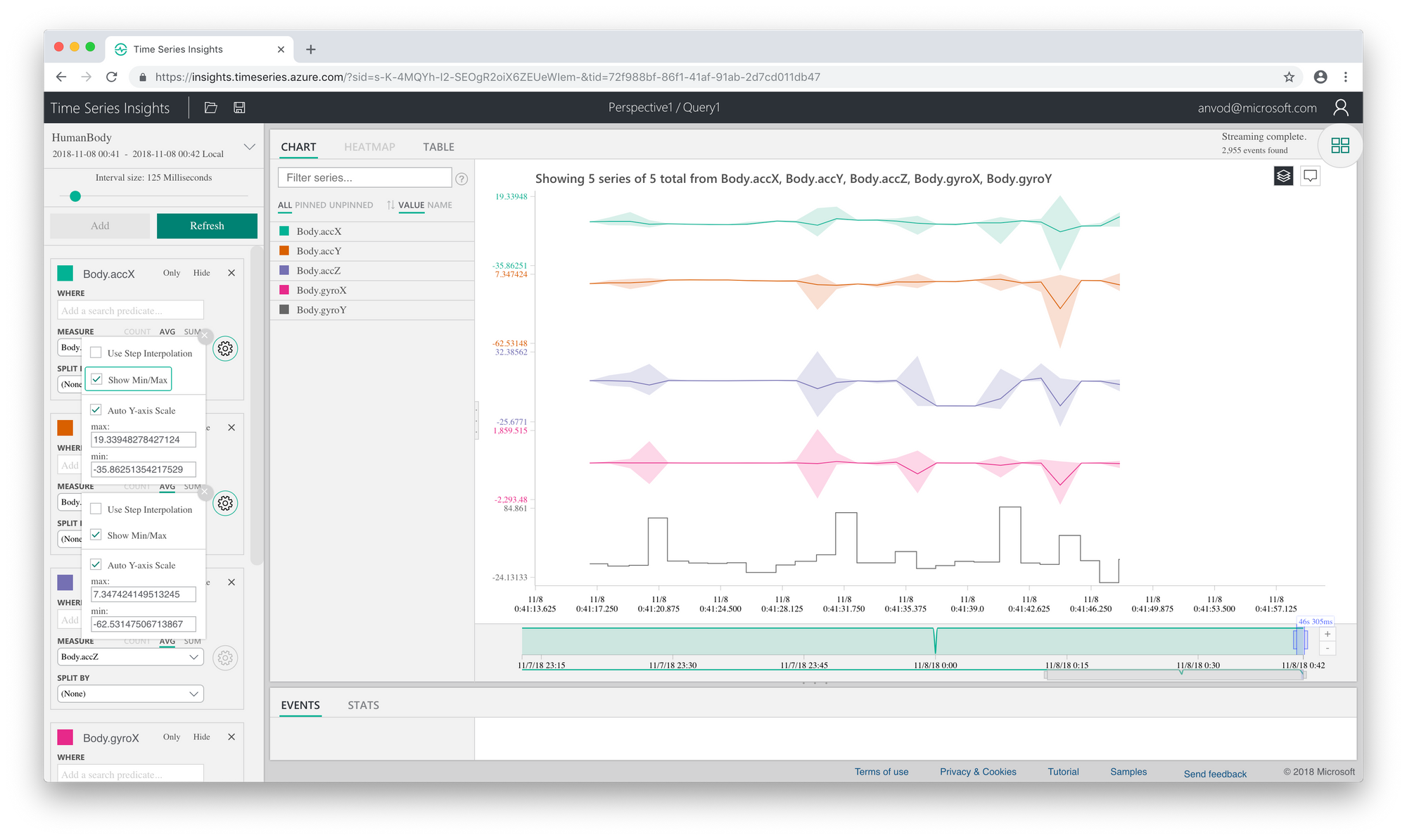

Time Series Insights

We now have an app that sends the sensor data directly into Event Hubs. Before we actually do anything useful with it, it would help to explore the data. A while back, I wrote about Time Series Insights. TSI has grown quite a lot since then, and I'm looking forward to exploring the new Preview features soon. However, for this project, I used the v1 experience.

TSI makes it easy to add an Event Hub as an event source. Once I had hooked Event Hubs up to TSI, I needed some meaningful data. So, naturally, I decided to see if I could discern a coin flip movement. I flipped the sensor a couple of times, with a bit of a delay in between, and opened the TSI Explorer to see if the data made sense.

The data we're sending from our application is fairly simple:

```csharp
public double accX;
public double accY;
public double accZ;
public double gyroX;
public double gyroY;
public double gyroZ;
```
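For reference, here's a minimal sketch of how one reading could be modelled and serialized into the JSON that ends up inside each event's Body. It assumes System.Text.Json (the app may well use a different serializer), uses properties rather than fields because System.Text.Json ignores public fields by default, and adds the timeStamp property discussed below as a UTC DateTime; the class name and that type are my assumptions.

```csharp
using System;
using System.Text.Json;

// Hypothetical model of a single reading. Property names mirror the fields
// above so the JSON keys are what TSI ends up seeing.
public class SensorReading
{
    public DateTime timeStamp { get; set; }   // assumed type; the post only names the property
    public double accX { get; set; }
    public double accY { get; set; }
    public double accZ { get; set; }
    public double gyroX { get; set; }
    public double gyroY { get; set; }
    public double gyroZ { get; set; }

    // Compact, single-line JSON, ready to be dropped into the send buffer.
    public string ToJson() => JsonSerializer.Serialize(this);
}
```

Because the send loop wraps each buffered string as {"Body":x} without quoting it, x has to be a complete JSON object like the one ToJson() returns.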

Each event also carries a timeStamp property, which TSI picks up automatically and maps to its view of the world in terms of time. To see if I could make sense of the data, I plotted the values above on a chart and turned on some nifty features, like Show Min/Max. Just for kicks, I also tried Use Step Interpolation on the gyro's Y axis, as that seemed like a sensible thing to do when trying to figure out whether the sensor was flipped.

Note, however, that I am not a data scientist, so this was purely an experiment to see whether the visualization would let me figure out when the flips happened. If it did, my plan was to work with some of my colleagues to figure out whether we could detect the flips automatically.

Success! The steps in the gyro's Y axis seem to perfectly match the flips I did with the sensor. This should be good enough input for the next phase of our project.
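Before any Magical Pixie Dust is involved, the most basic way to automate what I was doing by eye is a threshold on the gyro's Y axis: a flip shows up as a short burst of high angular velocity, so each burst can be counted once. The sketch below is just that heuristic, not the machine learning approach the project is heading towards; the FlipDetector name, the threshold and the minimum gap are made-up parameters that would need tuning against real recordings.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, naive flip detector: threshold on |gyroY| plus rising-edge detection.
public static class FlipDetector
{
    // Returns the indices of the samples where a flip appears to start.
    // threshold: minimum |gyroY| treated as "rotating" (a made-up value to tune).
    // minGap: a new flip only counts if it starts more than minGap samples after
    //         the previous one, so a brief dip mid-flip isn't counted twice.
    public static List<int> DetectFlips(IReadOnlyList<double> gyroY, double threshold, int minGap)
    {
        var flips = new List<int>();
        bool wasRotating = false;

        for (int i = 0; i < gyroY.Count; i++)
        {
            // Abs() so flips in either direction are detected.
            bool rotating = Math.Abs(gyroY[i]) >= threshold;
            bool risingEdge = rotating && !wasRotating;
            bool farEnough = flips.Count == 0 || i - flips[flips.Count - 1] > minGap;

            if (risingEdge && farEnough)
            {
                flips.Add(i);
            }
            wasRotating = rotating;
        }
        return flips;
    }
}
```

For example, DetectFlips(gyroYValues, 200, 3) flags the first sample of every burst where |gyroY| reaches 200, ignoring any new burst that starts within three samples of the previous flip.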

Wrapping Up

So, we managed to connect some really nifty Bluetooth Low Energy sensors via a Xamarin app, stream data from them to an Event Hub instance and visualise the data in Time Series Insights. That's pretty cool for a start, but what remains is to actually do something with the data (i.e. automatically figuring out when flipping happened).

In the architecture diagram above, we've only covered the left side. But that leaves room for more posts, and more playing around.